(Credit to work done by David Cornett and Clay Leach)

Description

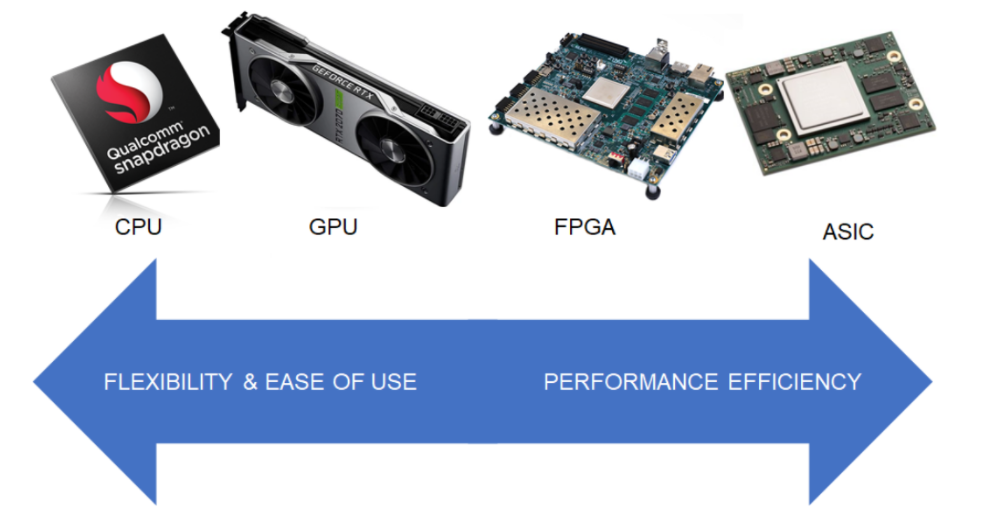

For most research applications of Neural Networks (NNs), the go-to compute device for tasks such as training and inference has been the Graphics Processing Unit (GPU). GPUs offer relatively high throughput and memory speeds, and they perform matrix and linear algebra operations much faster than a traditional CPU. Together, these factors drastically shorten NN training times and allow NN inference to run at real-time speeds.

The problem with traditional “discrete” GPUs lies in their size, weight, and power (SWaP). Discrete GPUs are typically large boards (relative to the size of an ATX case), their extensive cooling systems make them heavy, and their compute capability comes with high power draw. As a result, they are poorly suited to edge applications.

Field Programmable Gate Arrays (FPGAs) provide an alternative hardware platform for real-time NN inference. This work compared the runtime speed and power usage of several popular object detection and recognition networks on a GPU and an FPGA. Because of the way FPGAs handle computation and memory allocation, networks deployed on the FPGA need to be sparse (quantized and sometimes pruned). This work also analyzed the effect that such sparsification and optimization techniques have on the metric performance of the respective networks (Table 2).
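To make the quantization and pruning steps concrete, the following is a minimal sketch in plain Python. It is not the toolchain used in this work: the symmetric per-tensor int8 scheme, the magnitude-based pruning rule, the function names, and the example weights are all assumptions chosen for illustration.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * fraction)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integer codes in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights for a single layer (illustrative values only).
weights = [0.82, -0.05, 0.31, -1.27, 0.004, 0.66]

pruned = prune_by_magnitude(weights, fraction=0.5)
codes, scale = quantize_int8(pruned)
restored = dequantize(codes, scale)

# Worst-case rounding error introduced by quantization alone.
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(codes, scale, max_error)
```

The rounding error per weight is bounded by half the scale, which is one reason quantization can cost some accuracy; pruning removes small weights entirely, which trades additional accuracy for a smaller memory footprint.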

| System | Physical Dimensions | Physical Weight | Maximum Power | Throughput (batch size = 1) |
| --- | --- | --- | --- | --- |
| NVIDIA RTX 2070 Super | 267mm x 116mm x 35mm | 2,336g | 215W | 29 FPS |
| Xilinx ZCU104 UltraScale+ | 179mm x 149mm x 1.5mm | 1,113g | 60W | 27 FPS |

| Implementation | mean Average Precision (mAP) |
| --- | --- |
| NVIDIA RTX 2070 Super | 78.46% |
| Xilinx ZCU104 UltraScale+ | 77.4% |
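
As a rough way to read the two tables together, the sketch below derives throughput per watt and the mAP difference from the reported figures. The dictionary layout and variable names are assumptions for illustration, and the power values are the listed maximums rather than measured draw during inference, so the efficiency numbers are only an approximation.

```python
# Figures copied from the tables above; the structure is illustrative.
systems = {
    "gpu": {"name": "NVIDIA RTX 2070 Super", "max_power_w": 215, "fps": 29, "map_pct": 78.46},
    "fpga": {"name": "Xilinx ZCU104", "max_power_w": 60, "fps": 27, "map_pct": 77.4},
}


def fps_per_watt(entry):
    """Frames per second delivered per watt of maximum rated power."""
    return entry["fps"] / entry["max_power_w"]


gpu_eff = fps_per_watt(systems["gpu"])
fpga_eff = fps_per_watt(systems["fpga"])

# How many times more throughput per watt the FPGA delivers than the GPU.
efficiency_ratio = fpga_eff / gpu_eff

# Accuracy cost of the FPGA implementation, in percentage points of mAP.
map_drop = systems["gpu"]["map_pct"] - systems["fpga"]["map_pct"]

print(f"GPU:  {gpu_eff:.3f} FPS/W")
print(f"FPGA: {fpga_eff:.3f} FPS/W")
print(f"FPGA/GPU efficiency ratio: {efficiency_ratio:.2f}x")
print(f"mAP drop on FPGA: {map_drop:.2f} percentage points")
```

Under these assumptions, the FPGA delivers roughly three times the throughput per watt of maximum rated power, at a cost of about one percentage point of mAP.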